Over the weekend, I set up a frontend and a proxy backend for working with multiple LLMs.

I used two awesome open-source projects. Shoutout to the developers:

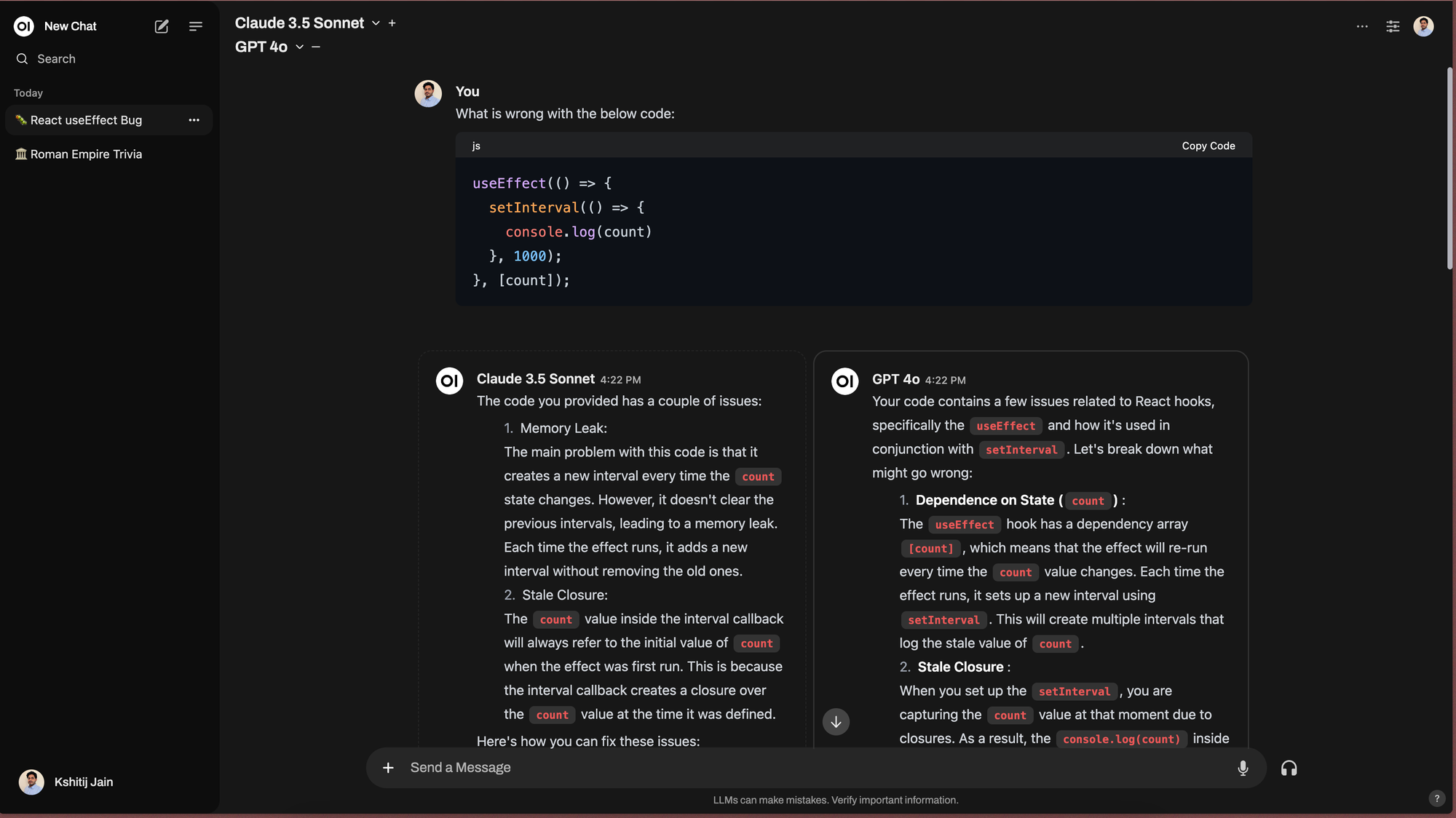

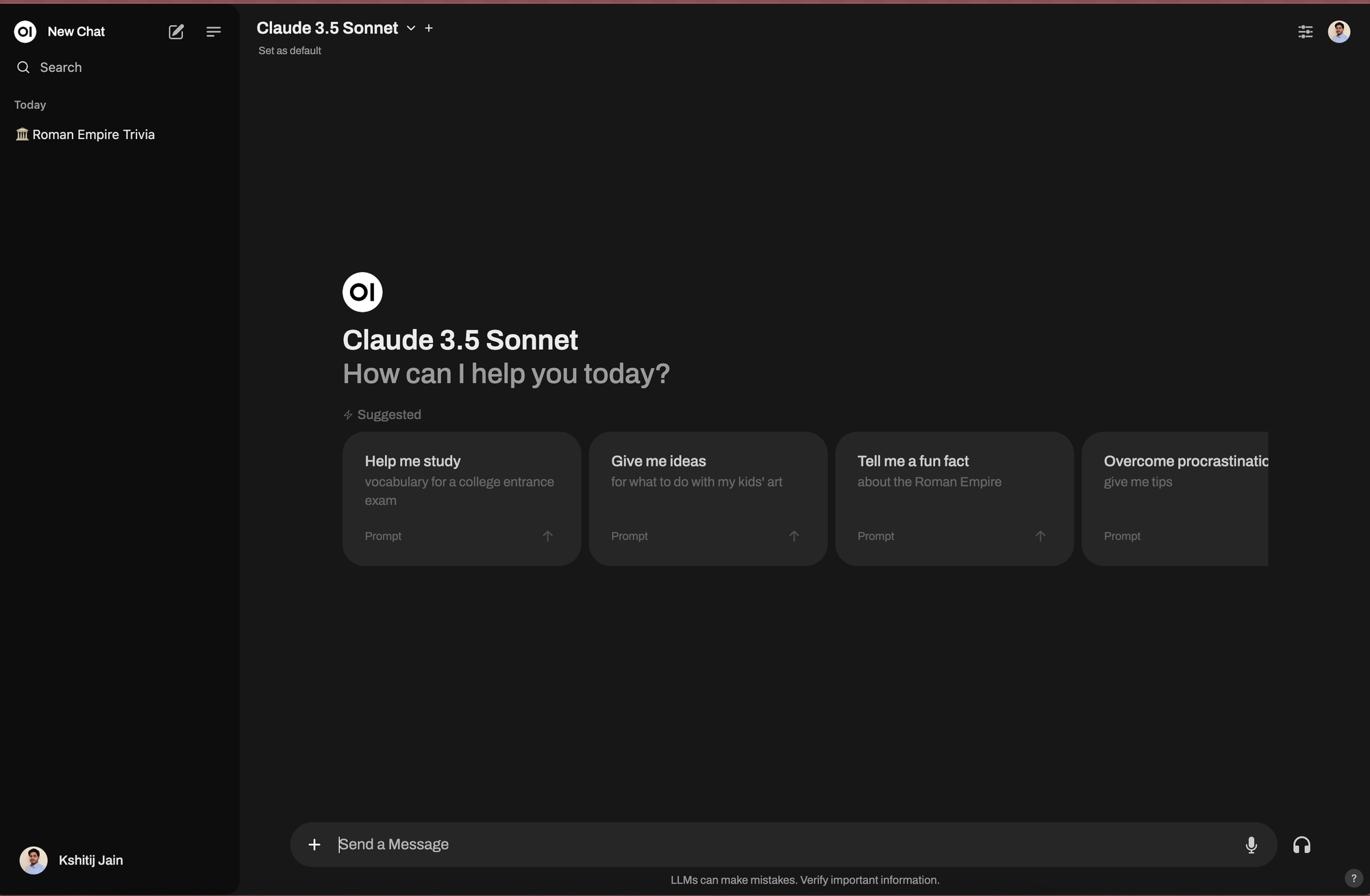

- Open WebUI (https://github.com/open-webui/open-webui) for the frontend

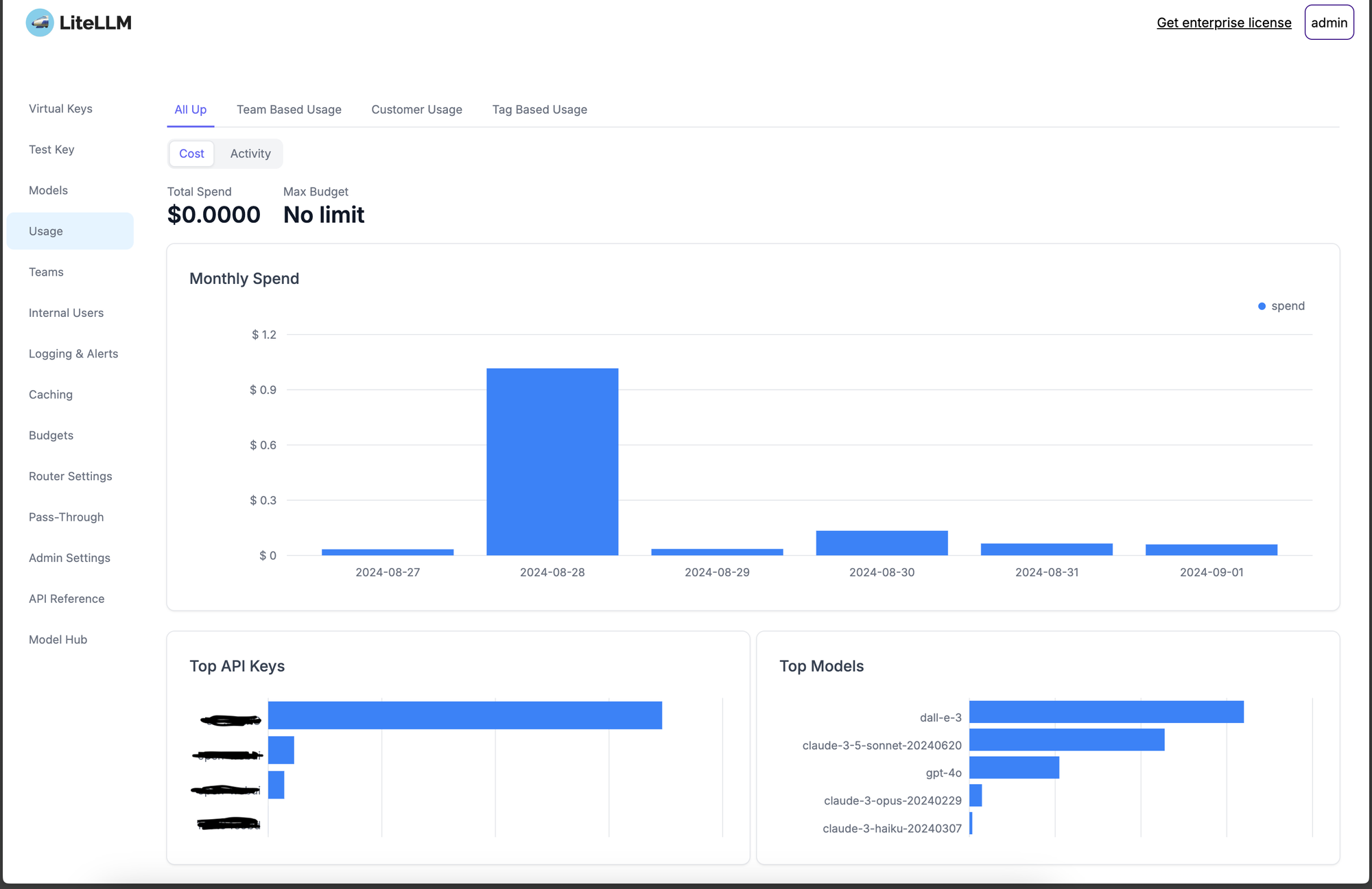

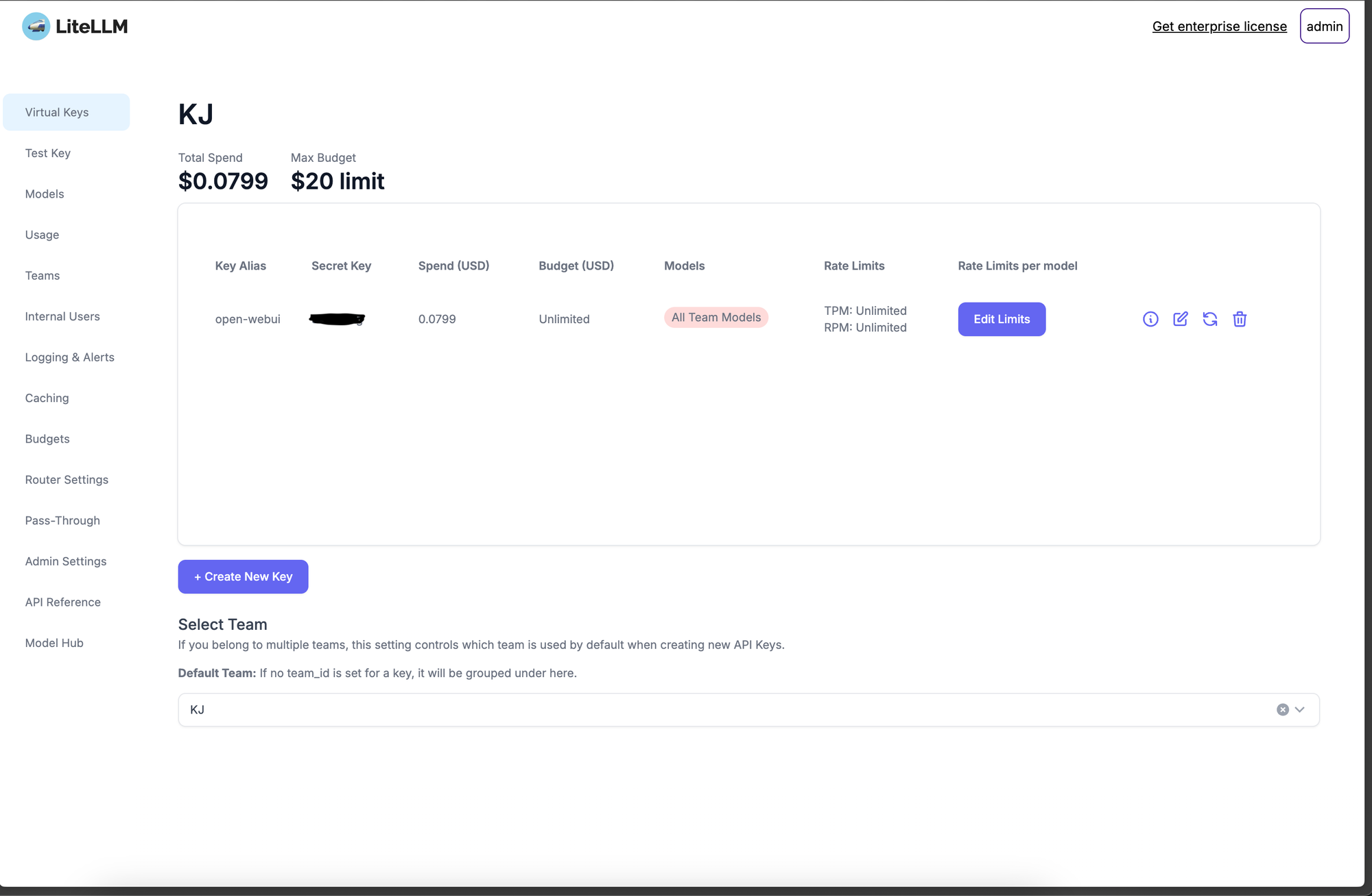

- LiteLLM (https://github.com/BerriAI/litellm) for the proxy backend
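Roughly speaking, the split works because the proxy gives the frontend a single OpenAI-style chat API and decides which upstream provider serves each model name. The toy Python sketch below illustrates only that routing idea; it is not LiteLLM's implementation. The aliases, provider labels and upstream model IDs are hypothetical placeholders (in LiteLLM itself, this mapping is configured in the `model_list` section of the proxy's `config.yaml`).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str        # upstream provider label (hypothetical)
    upstream_model: str  # model ID forwarded to that provider (hypothetical)


# Hypothetical routing table: public alias the frontend sees -> upstream target.
ROUTES = {
    "gpt-4o": Route(provider="openai", upstream_model="gpt-4o"),
    "claude": Route(provider="anthropic", upstream_model="claude-3-5-sonnet"),
}


def route_request(body: dict) -> tuple[Route, dict]:
    """Resolve the `model` alias in an OpenAI-style chat body to its upstream target."""
    alias = body.get("model")
    if alias not in ROUTES:
        raise KeyError(f"unknown model alias: {alias!r}")
    route = ROUTES[alias]
    # Forward the same messages unchanged; only the model name is rewritten.
    upstream_body = {**body, "model": route.upstream_model}
    return route, upstream_body
```

A request for `"claude"` resolves to the `anthropic` route with `"model"` rewritten to `"claude-3-5-sonnet"`, while the messages pass through untouched. Because every model sits behind the same API, adding a provider means adding a table entry rather than changing the frontend.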

This enables me to:

- Hit multiple models (GPT-4o, Claude, etc.) from a single frontend instead of jumping between multiple sites, and even compare the responses from multiple models to a single prompt.

- Analyse usage and costs across all the models from a single dashboard.

- Set up a single budget across all models.
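Since the proxy speaks the OpenAI chat-completions format, comparing models can be as simple as sending the same request body with different `model` values. Below is a minimal sketch using only the Python standard library, assuming the proxy listens at `http://localhost:4000/v1/chat/completions` and accepts a bearer key; the URL, key and model aliases are placeholders for your own deployment. The sender is passed in as a parameter so the fan-out logic can be exercised without a live proxy.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Placeholders (assumptions): point these at your own LiteLLM proxy and key.
PROXY_URL = "http://localhost:4000/v1/chat/completions"
API_KEY = "sk-your-proxy-key"


def build_body(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat-completions request body for one model."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def post_chat(body: dict) -> dict:
    """POST one request body to the proxy and return the decoded JSON reply."""
    req = urllib.request.Request(
        PROXY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)


def compare(
    prompt: str,
    models: list[str],
    send: Callable[[dict], dict] = post_chat,
) -> dict[str, dict]:
    """Send one prompt to every model concurrently and collect the replies."""
    bodies = [build_body(m, prompt) for m in models]
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # map() preserves input order, so replies line up with `models`.
        replies = list(pool.map(send, bodies))
    results = {}
    for model, reply in zip(models, replies):
        results[model] = {
            "answer": reply["choices"][0]["message"]["content"],
            # `usage` may be absent; default to 0 tokens if the reply omits it.
            "tokens": reply.get("usage", {}).get("total_tokens", 0),
        }
    return results
```

With the proxy running, `compare("Explain RAID 1 in one sentence.", ["gpt-4o", "claude"])` returns each model's answer alongside the `usage.total_tokens` it reported. That client-side tally is only a convenience; the proxy keeps its own record of spend and budgets.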

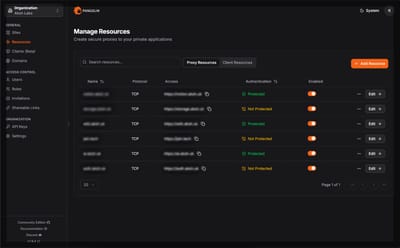

I self-hosted this setup on a Hetzner VPS.


Next Steps

Self-host some open-source LLMs, such as Llama.